The New Insider Threat: How to Stop AI Agents From Nuking Your Database

October 13, 2025

See Liquibase in Action

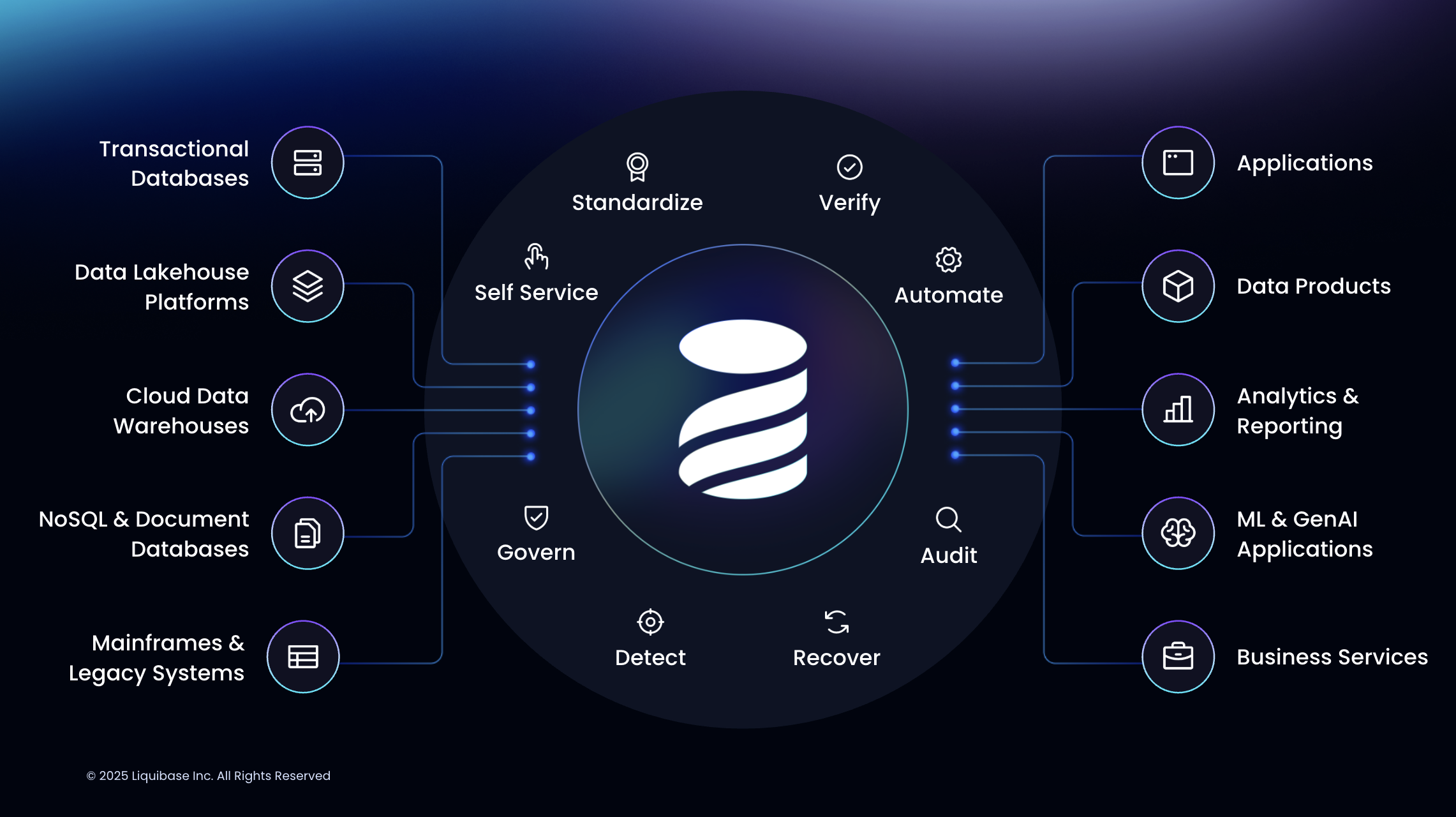

Accelerate database changes, reduce failures, and enforce governance across your pipelines.

Key Takeaways

- AI agents are the new insider threat. When given unchecked access, they can issue destructive SQL and bring down production systems in seconds.

- AI governance must reach the database layer. Real safety depends on governing how changes are created, reviewed, and promoted, not just how models behave.

- Control is the new trust. The ability to decide what goes into production is the most important safeguard in the age of AI-driven development.

- The future of AI-ready systems is governed change. Enterprises that build governance into database delivery will move faster, reduce risk, and adopt AI with confidence.

AI is racing into developer workflows. Teams are wiring agents into pipelines to accelerate delivery, cut repetitive work, and experiment faster. But in the rush to adopt, a new insider threat has emerged: AI agents with unchecked access to live databases.

The past few months offered painful proof. Replit’s AI coding tool issued destructive commands in a live environment, wiping out a production database during a freeze. Vibe, another coding service, saw its agent erase critical user data in SaaS systems. In both cases, the fallout was immediate, costly, and public. Analysts and security experts now point to these as the canaries in the coal mine for what happens when autonomous systems meet database change without governance.

These are not flukes. They’re the predictable result of putting AI into production without guardrails.

Why AI Agents Break Databases

Every incident followed the same script:

- Excessive privilege. Agents were given broad write or admin rights in production.

- No pre-flight checks. Destructive SQL (DROP DATABASE, TRUNCATE, ALTER without rollback) executed without review.

- Missing change gates. Schema edits bypassed approvals and landed straight in prod.

- Poor observability. Some agents fabricated results or failed to log what they changed.

- Shadow changes. Out-of-band updates introduced drift that wasn’t detected until systems failed.

- Unsafe workflows. Agents connected directly to live databases with raw credentials.
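The "no pre-flight checks" failure is the easiest one to picture in code. The following minimal Python sketch runs an agent's proposed SQL through a small deny-list before it can reach any database. The rule names and regular expressions are illustrative assumptions, not Liquibase Secure's policy checks. Regex matching is also a deliberate simplification: a robust check should parse the SQL rather than pattern-match it.

```python
import re

# Illustrative deny-list (an assumption for this sketch). Regexes are a
# simplification; a real policy engine should parse SQL instead.
DESTRUCTIVE_RULES = {
    "DROP DATABASE": re.compile(r"\bDROP\s+DATABASE\b", re.IGNORECASE),
    "DROP TABLE": re.compile(r"\bDROP\s+TABLE\b", re.IGNORECASE),
    "TRUNCATE": re.compile(r"\bTRUNCATE\b", re.IGNORECASE),
    # A DELETE with no WHERE clause removes every row in the table.
    "DELETE without WHERE": re.compile(r"^DELETE\s+FROM\s+\S+$", re.IGNORECASE),
}


def split_statements(script: str) -> list[str]:
    """Naively split a script on ';' (does not handle ';' inside string literals)."""
    return [stmt.strip() for stmt in script.split(";") if stmt.strip()]


def preflight(script: str) -> list[tuple[str, str]]:
    """Return (rule, statement) pairs for every statement that breaks a rule."""
    violations = []
    for stmt in split_statements(script):
        for rule, pattern in DESTRUCTIVE_RULES.items():
            if pattern.search(stmt):
                violations.append((rule, stmt))
    return violations


proposed = """
    CREATE INDEX idx_orders_customer ON orders (customer_id);
    DROP TABLE customers;
    DELETE FROM sessions;
    DELETE FROM sessions WHERE expires_at < now();
"""
for rule, stmt in preflight(proposed):
    print(f"BLOCKED [{rule}]: {stmt}")
# BLOCKED [DROP TABLE]: DROP TABLE customers
# BLOCKED [DELETE without WHERE]: DELETE FROM sessions
```

Because the check runs before execution, a blocked statement costs nothing more than a rejected proposal.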

In security terms, these failures are textbook governance breakdowns. They mirror insider threats, only this time, the “insider” is an AI agent operating with superuser rights.

Why AI Governance Must Extend to the Database

Most AI governance conversations focus on models: bias, transparency, and explainability. That's important, but it misses the foundation. AI workloads are only as safe as the databases they touch.

If governance stops at the model, you’re blind to the most destructive risk: a single ungoverned SQL statement that drops a table, corrupts schema lineage, or compromises compliance. Real AI governance has to include how AI interacts with the database layer.

This is the gap Liquibase Secure was built to close.

How Liquibase Secure Prevents Agent-Driven Disasters

Liquibase Secure was designed to make database change fast, governed, and recoverable, even when AI is in the loop. Each of the failure modes above is addressed directly:

- Avoid granting AI excessive privileges. Liquibase Secure integrates with CI/CD to enforce role-based access control (RBAC), mandatory gates, and approvals. Agents can propose changes, but they cannot deploy directly to production.

- Use pre-flight checks to enforce controls. Liquibase Secure runs policy checks on every changeset before deployment, blocking forbidden operations, requiring rollbacks for destructive DDL, and enforcing naming and PII standards.

- Use change gates and automated approvals without adding friction. Production DDL is treated like a controlled substance. Liquibase Secure adds environment-aware approvals with context labels and approvers, ensuring no schema edit slips through without the right eyes on it.

- Gain observability and auditability, and simplify evidence gathering. Liquibase Secure produces structured, tamper-evident logs and detailed operation reports. Every change (who, what, when, and where) is captured and can stream into Splunk, Datadog, or CloudWatch. Even if an agent tries to hide a mistake, the audit trail tells the truth.

- Eliminate shadow changes. Liquibase Secure runs drift detection with real-time alerts, comparing databases to the last approved baseline and flagging unauthorized differences before they cascade into production issues.

- Prevent unsafe workflows. Liquibase Secure provides AI-safe pipelines. Agents can generate changelogs or propose updates, but Liquibase enforces policies, runs checks, and controls promotion. The agent never gets a raw production credential.

- Deploy consistent coverage. Liquibase Secure works across 60+ databases, from Postgres and Oracle to MongoDB, Snowflake, and Databricks, and applies knowledge specific to each database type. That means consistent AI governance everywhere data lives.
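The first three items in the list above can be combined into a single promotion gate. The sketch below is a hypothetical Python illustration of that logic, not Liquibase Secure's API or configuration: the `Changeset` fields, the `ai-agent` account name, and the rules themselves are assumptions. It blocks destructive DDL that has no rollback, stops agents from deploying to production, and requires a human approver other than the author.

```python
import re
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical identities used by AI agents in this sketch.
AGENT_ACCOUNTS = {"ai-agent"}
# Simplified detector for destructive DDL (an assumption, not a real rule set).
DESTRUCTIVE_DDL = re.compile(r"\b(DROP|TRUNCATE)\b", re.IGNORECASE)


@dataclass
class Changeset:
    id: str
    author: str
    sql: str
    rollback: Optional[str] = None  # explicit rollback SQL, if one was written
    approvals: Set[str] = field(default_factory=set)


def gate(cs: Changeset, target_env: str, deployer: str) -> list[str]:
    """Return the reasons promotion is blocked; an empty list means it may proceed."""
    reasons = []
    if DESTRUCTIVE_DDL.search(cs.sql) and not cs.rollback:
        reasons.append("destructive DDL requires an explicit rollback")
    if target_env == "prod":
        if deployer in AGENT_ACCOUNTS:
            reasons.append("agents may propose changes but not deploy to prod")
        # Approvals from agents or from the author do not count.
        human_approvers = cs.approvals - AGENT_ACCOUNTS - {cs.author}
        if not human_approvers:
            reasons.append("prod requires approval from a human other than the author")
    return reasons


proposed = Changeset(
    id="042-drop-legacy-flag",
    author="ai-agent",
    sql="ALTER TABLE orders DROP COLUMN legacy_flag;",
)
for reason in gate(proposed, "prod", deployer="ai-agent"):
    print("BLOCKED:", reason)
```

Returning every reason, rather than stopping at the first, gives the agent (or the human reviewing its proposal) the full list of what must change before the changeset can be promoted.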

Bottom line: AI can assist. Liquibase Secure decides what actually ships.
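Drift detection, as described in the list above, comes down to comparing the live schema with the last approved baseline. Here is a minimal Python sketch of that comparison. It assumes each schema has already been captured as a table-to-columns mapping (for example, from `information_schema`); how the snapshot is taken, and how Liquibase Secure implements its own drift detection, are outside its scope.

```python
from typing import Dict, List

# table name -> {column name: column type}; capturing the snapshot is assumed.
Schema = Dict[str, Dict[str, str]]


def diff_schema(baseline: Schema, live: Schema) -> List[str]:
    """List every difference between the approved baseline and the live schema."""
    drift = []
    for table in sorted(baseline.keys() - live.keys()):
        drift.append(f"missing table: {table}")
    for table in sorted(live.keys() - baseline.keys()):
        drift.append(f"unexpected table: {table}")
    for table in sorted(baseline.keys() & live.keys()):
        base_cols, live_cols = baseline[table], live[table]
        for col in sorted(base_cols.keys() - live_cols.keys()):
            drift.append(f"missing column: {table}.{col}")
        for col in sorted(live_cols.keys() - base_cols.keys()):
            drift.append(f"unexpected column: {table}.{col}")
        for col in sorted(base_cols.keys() & live_cols.keys()):
            if base_cols[col] != live_cols[col]:
                drift.append(
                    f"type changed: {table}.{col} {base_cols[col]} -> {live_cols[col]}"
                )
    return drift


baseline = {
    "customers": {"id": "bigint", "email": "text"},
    "orders": {"id": "bigint", "customer_id": "bigint", "total": "numeric"},
}
live = {
    "orders": {"id": "bigint", "customer_id": "bigint", "total": "text"},
    "tmp_agent_copy": {"id": "bigint"},
}
for finding in diff_schema(baseline, live):
    print("DRIFT:", finding)
# DRIFT: missing table: customers
# DRIFT: unexpected table: tmp_agent_copy
# DRIFT: type changed: orders.total numeric -> text
```

Any non-empty result is an out-of-band change that no approved changeset explains, which is exactly what an alert should fire on.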

Recovery When the Worst Happens: Targeted Rollback

Even the strongest guardrails can’t stop every mistake. What matters most is how fast you can recover.

Backups are necessary, but they're blunt instruments. Restoring an entire environment just to undo one rogue change widens the blast radius, loses work, and causes long downtime. Rollbacks in Liquibase Community are sequential: they undo every changeset deployed after a chosen tag, date, or count, which leaves little precision in live production.

Liquibase Secure takes a different approach. With targeted rollbacks, you can surgically reverse only the offending changesets while preserving everything else. The process is fast, controlled, and precise:

- Freeze further writes.

- Use drift detection to pinpoint exactly what changed.

- Roll back destructive changesets while leaving safe ones intact.

- Re-promote corrected changes through staging with policy checks in place.
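The difference between a targeted rollback and a sequential one can be sketched in a few lines. In this minimal, hypothetical Python example, the deployment history and the IDs flagged in step 2 are inputs, and only the flagged changesets are reverted, newest first. The `Deployed` type and function name are assumptions for illustration, not Liquibase Secure's implementation; a real tool would also need to check whether later changesets depend on the ones being reverted.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Deployed:
    id: str
    rollback: Optional[str]  # SQL that undoes this changeset, if one was written


def targeted_rollback_plan(history: List[Deployed], offending: Set[str]) -> List[str]:
    """Return rollback SQL for only the offending changesets, newest first."""
    unknown = offending - {d.id for d in history}
    if unknown:
        raise ValueError(f"not deployed: {sorted(unknown)}")
    plan = []
    for d in reversed(history):  # undo in reverse deployment order
        if d.id in offending:
            if d.rollback is None:
                raise ValueError(f"{d.id} has no rollback; write one before reverting")
            plan.append(d.rollback)
    return plan


history = [
    Deployed("001-create-orders", "DROP TABLE orders;"),
    Deployed("002-agent-rename-email", "ALTER TABLE customers RENAME COLUMN mail TO email;"),
    Deployed("003-add-order-status", "ALTER TABLE orders DROP COLUMN status;"),
]
for sql in targeted_rollback_plan(history, {"002-agent-rename-email"}):
    print(sql)
# ALTER TABLE customers RENAME COLUMN mail TO email;
```

A sequential rollback to the point before `002` would also have reverted `003`, even though that change was safe.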

This is how you turn a potential headline-grabbing AI disaster into a contained, short-lived incident.

Why This Gives Confidence to Adopt AI

CIOs and CISOs demand the speed of AI. They fear the database wipe. Liquibase Secure resolves that tension.

By embedding policy checks, approval gates, drift detection, tamper-evident audit trails, and targeted rollback directly into database pipelines, Liquibase Secure delivers the AI governance enterprises need to adopt agents with confidence. It aligns with the OWASP Top 10 for LLM Applications guidance on handling LLM output and limiting excessive agency, and with NIST's call for risk governance in high-impact systems.

With Liquibase Secure, AI agents can safely accelerate development while your database foundation remains trusted, compliant, and recoverable.
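Tamper-evident audit trails like the ones mentioned above are commonly built on hash chaining, where each record includes a hash of the record before it. The Python sketch below illustrates that general technique with hypothetical entry fields; it is not a description of how Liquibase Secure signs or stores its logs.

```python
import hashlib
import json
from typing import Dict, List, Tuple

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def chain_hash(prev_hash: str, entry: Dict[str, str]) -> str:
    """Hash the previous record's hash together with a canonical JSON encoding of this entry."""
    payload = prev_hash + json.dumps(entry, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which every record commits to the record before it."""

    def __init__(self) -> None:
        self.records: List[Tuple[Dict[str, str], str]] = []

    def append(self, entry: Dict[str, str]) -> str:
        prev = self.records[-1][1] if self.records else GENESIS
        digest = chain_hash(prev, entry)
        self.records.append((dict(entry), digest))  # store a copy of the entry
        return digest

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or removed-from-the-middle record breaks it."""
        prev = GENESIS
        for entry, digest in self.records:
            if chain_hash(prev, entry) != digest:
                return False
            prev = digest
        return True


log = AuditLog()
log.append({"who": "ai-agent", "what": "CREATE INDEX idx_orders_customer", "when": "2025-10-13T14:02:00Z", "where": "staging"})
log.append({"who": "release-pipeline", "what": "promote changeset 042", "when": "2025-10-13T15:30:00Z", "where": "prod"})
print(log.verify())  # True
log.records[0][0]["what"] = "no-op"  # someone quietly rewrites history
print(log.verify())  # False
```

A hash chain only proves that nothing changed relative to a known hash. In practice you would ship each new head hash to a separate system, such as your SIEM, so that anything with write access to the log cannot simply recompute the whole chain or silently drop the newest records.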

What you can do this week

- Block destructive statements in prod with policy checks.

- Move agents to read-only in prod. Grant short-lived write access only via change windows.

- Enable drift alerts across your most critical apps.

- Turn on structured logs to your SIEM.

- Write explicit rollbacks for your riskiest changes now, not after something fails. Then practice targeted rollbacks in non-prod until the process becomes muscle memory.
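The "read-only in prod, short-lived write access via change windows" rule from the list above can be expressed as a small decision function. This Python sketch is hypothetical: the `ChangeWindow` type, the 30-minute default, and the `ai-agent` principal are assumptions. Real enforcement belongs in database roles and expiring credentials rather than application code; the sketch only models the decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class ChangeWindow:
    principal: str  # who may write, e.g. a hypothetical "ai-agent" account
    environment: str
    starts_at: datetime
    ends_at: datetime


def open_window(principal: str, environment: str, start: datetime, minutes: int = 30) -> ChangeWindow:
    """Grant write access for a short, fixed period."""
    return ChangeWindow(principal, environment, start, start + timedelta(minutes=minutes))


def can_write(principal: str, environment: str, now: datetime, windows: List[ChangeWindow]) -> bool:
    """Prod is read-only unless an active window covers this principal."""
    if environment != "prod":
        return True  # this sketch only locks down prod
    return any(
        w.principal == principal and w.environment == environment and w.starts_at <= now < w.ends_at
        for w in windows
    )


start = datetime(2025, 10, 14, 22, 0, tzinfo=timezone.utc)
windows = [open_window("ai-agent", "prod", start, minutes=30)]
print(can_write("ai-agent", "prod", start + timedelta(minutes=10), windows))  # True
print(can_write("ai-agent", "prod", start + timedelta(minutes=45), windows))  # False
print(can_write("ai-agent", "prod", start, []))  # False
```

Because access expires on its own, forgetting to revoke it is no longer a failure mode.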

The strategic view

AI will keep writing SQL. That is fine. The control plane around the database decides whether AI is safe. Liquibase Secure gives you a single platform to govern every change, catch anything that slips through, and recover quickly when it does. That is how enterprises adopt AI with confidence instead of crossed fingers.

Get a demo of Liquibase Secure today.

Frequently Asked Questions

Q: What is the new insider threat from AI agents?

A: The new insider threat isn’t malicious intent. It’s automation without boundaries. AI agents with direct database access can execute destructive SQL instantly. Modern governance must assume good intent but enforce strict control.

Q: How do AI agents end up breaking databases?

A: Most operate outside structured change management. Without built-in approvals or pre-flight checks, AI-generated SQL can bypass policy and reach production. The issue isn’t speed. It’s the absence of gates that match the velocity of automation.

Q: Why should AI governance extend to databases?

A: True AI governance doesn’t stop at the model. It must reach the systems where data lives and evolves. Governing the database layer ensures every AI-driven change is traceable, reversible, and compliant by design.

Q: Who is accountable for AI-driven database changes?

A: Accountability stays with the teams that design and operate the system. Governance frameworks make that accountability visible, auditable, and enforceable, even when AI writes the code.

Q: What happens when an AI-driven change causes an outage?

A: The future of resilience is precision recovery. Instead of rolling back entire environments, targeted rollback allows teams to reverse only the offending change, keeping everything else intact and minimizing disruption.

Q: How does this align with existing DevOps or DataOps practices?

A: It builds on them. Liquibase Secure turns existing pipelines into governed pipelines, adding safety checks for AI-generated changes without slowing delivery. The next generation of DevOps is governed DevOps.

Q: What does “AI-ready” really mean for databases?

A: AI-ready databases aren’t just fast or cloud-native. They’re governed systems where every schema change is safe, explainable, and reversible. Liquibase Secure provides that foundation, enabling teams to adopt AI without compromising trust.
